Control over “Magic Prompts”: Practical Techniques and Lessons from the Day

Practical techniques for working with AI when there are no “magic prompts”: how to keep AI responses under control, check quality, and split work across several models for better results. Plus why backup branches belong in your development process.

I sat down to read a book in Spanish and thought: “I need a tool for this. I’ll whip it up right now — nothing fancy, just a script and a prompt.” It ended up taking seven hours. I’m still not entirely sure where the time went.

Session plan

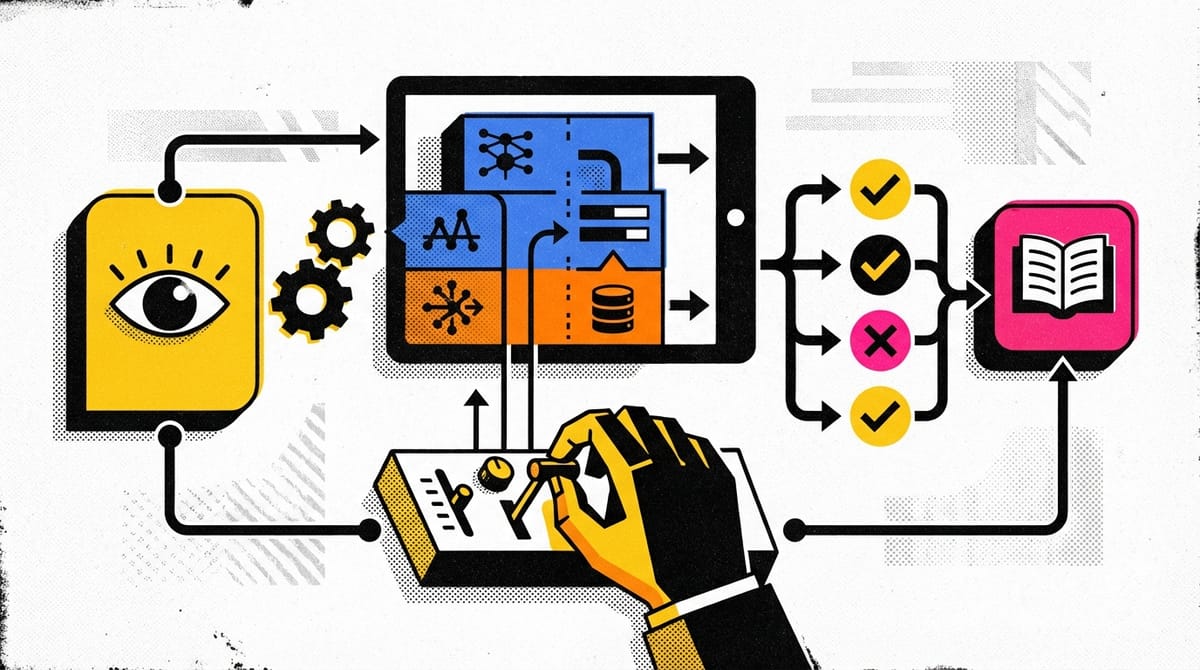

I wanted to add a Reading Module to my language tutor: a module that helps break down phrases from books. It seemed simple: write one prompt module, lightly refine the methodology, and wire the subsystem call into the bot's main control system, while also saving results to PostgreSQL for later analysis.
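One way to keep a new module from swallowing the core is to have modules plug into a router that the core owns. A minimal Python sketch, assuming a command-style bot; `BotCore`, the `/read` command and `reading_module` are hypothetical names, and the module body is a placeholder rather than a real model call.

```python
from typing import Callable, Dict

# A module handler takes the text after the command and returns a reply.
Handler = Callable[[str], str]


class BotCore:
    """Hypothetical main control system that routes commands to modules."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, command: str, handler: Handler) -> None:
        # Refuse silent overwrites so a new module cannot replace an existing one.
        if command in self._handlers:
            raise ValueError(f"command {command!r} is already registered")
        self._handlers[command] = handler

    def dispatch(self, message: str) -> str:
        # "/read Me da igual" -> command "/read", payload "Me da igual"
        command, _, payload = message.partition(" ")
        handler = self._handlers.get(command)
        if handler is None:
            return f"Unknown command: {command}"
        return handler(payload)


def reading_module(phrase: str) -> str:
    # Placeholder: a real module would send the phrase to the model
    # together with the reading prompt and return its breakdown.
    return f"Breakdown requested for: {phrase}"


core = BotCore()
core.register("/read", reading_module)
```

Adding a module is a single `register` call, and registering a command twice raises instead of overwriting, so the core itself never has to be rewritten to accommodate a new feature.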

I did a mini-review: I compared three different approaches to explaining grammar, cut the excess, and assembled a final version. This is v1, and I expect to refine it over time.

How it actually went

I always ask the model to confirm and narrate the plan first, but sometimes Claude Code still surprises me. I asked for a subsystem with a single script and got a broken bot control system instead: Claude Code decided to replace the core with this mini-module. The mini-module turned out not to be mini. Then it started executing changes without my approval, and I couldn’t stop it in time. Suddenly I’m cycling through Docker Compose setups and restoring systems from scratch, some from a backup branch, some fresh. Fun times.

Notable moments

Moment 1. “Evidence” that Sonnet 4.5 exists

The strangest glitch in a while. Claude Code suddenly concluded that my API “plan” only allows Haiku and not Sonnet 4.5. It took many iterations to:

- Convince the model that “plans” don’t exist in that sense (Anthropic exposes a single API).

- Remove the hard‑coded model name from the code.

- Bring in “evidence” from another project (resume database) where Sonnet works.

- Add auto‑detection of available models.
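Removing the hard-coded name and auto-detecting models can be combined into one small function. A minimal sketch under assumptions: the list of available model IDs is passed in (in a real bot it could come from Anthropic's model-listing endpoint, exposed as `client.models.list()` in the official Python SDK if your SDK version provides it), while the `CLAUDE_MODEL` environment variable and the family preference order are hypothetical choices for illustration.

```python
import os
from typing import Iterable, Optional

# Model families in order of preference. Matching by substring means the
# dated snapshot suffix does not have to be hard-coded anywhere.
DEFAULT_PREFERENCE = ("sonnet-4-5", "sonnet", "haiku")


def pick_model(
    available: Iterable[str],
    preference: Iterable[str] = DEFAULT_PREFERENCE,
    override_env: str = "CLAUDE_MODEL",
) -> Optional[str]:
    """Choose a model ID from the ones the API reports as available."""
    models = list(available)

    # 1. An explicit override wins, but only if the API actually lists it.
    override = os.environ.get(override_env)
    if override and override in models:
        return override

    # 2. Otherwise take the best match for each family, in preference order.
    for family in preference:
        matches = [m for m in models if family in m]
        if matches:
            # Rough heuristic: with date-suffixed IDs, the lexicographically
            # largest one is usually the newest snapshot.
            return max(matches)

    # 3. Nothing suitable: let the caller fail loudly instead of guessing.
    return None
```

With no override set, `pick_model(["claude-3-5-haiku-20241022", "claude-sonnet-4-5-20250929"])` returns the Sonnet 4.5 ID; the decision comes from what the API reports, not from what the code (or the model writing it) believes about your “plan.”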

At one point I was literally pasting logs from another service: “Look, here’s a successful request to claude-sonnet-4-5-20250929!” After that I re-ran the check, and everything worked. I still don’t know what happened “in the AI’s head,” but it was a lesson in the value of a working proof of concept from a real system.

Moment 2. Prompting as quality control

I don’t have “magic prompts that always work.” What I do have is strict control every time:

- “Explain in your own words what you understood from the task.”

- “Which files are you going to change and why?”

- “Repeat the action plan before executing.”
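The same checklist can be made routine in code. A minimal sketch, assuming you drive the model through your own wrapper: `build_control_prompt` appends the three questions to any task, and `files_outside_scope` flags planned paths outside the area the task should touch. Both function names and the `reading/` directory are hypothetical, and extracting the file list from the model's answer is left out.

```python
from typing import Iterable, List, Tuple

# The three control questions, asked before any change is made.
CONTROL_QUESTIONS = (
    "Explain in your own words what you understood from the task.",
    "Which files are you going to change and why?",
    "Repeat the action plan before executing.",
)


def build_control_prompt(task: str) -> str:
    """Append the control questions so the model answers before acting."""
    questions = "\n".join(f"- {q}" for q in CONTROL_QUESTIONS)
    return (
        f"{task}\n\n"
        f"Before changing anything, answer these questions:\n{questions}\n"
        "Wait for my approval before executing."
    )


def files_outside_scope(planned: Iterable[str], allowed_prefixes: Tuple[str, ...]) -> List[str]:
    """Return planned file paths outside the directories this task may touch."""
    # str.startswith accepts a tuple and matches any of its prefixes.
    return [path for path in planned if not path.startswith(allowed_prefixes)]
```

For a plan listing `reading/module.py` and `bot/core.py`, `files_outside_scope(plan, ("reading/",))` returns `["bot/core.py"]`, which is the cue to stop and ask why the core is being touched.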

Today, running the Claude desktop app in parallel for isolated parts of the work helped a lot. For example, I asked Opus to evaluate the quality of the pedagogical explanations; it gave recommendations that I then implemented through Claude Code.

Thoughtful model-switching beats trying to “squeeze everything” out of one model:

- Haiku — for quick tasks

- Sonnet — for development

- Opus — for critical review
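The split can live in code as a small routing table. A minimal sketch: `TaskKind`, the `call` function (whatever actually sends a prompt to a given model family) and the review prompt are hypothetical. In the session described above, the Opus review was done by hand in the Claude desktop app; `draft_then_review` only illustrates how that step could be automated.

```python
from enum import Enum
from typing import Callable, Tuple


class TaskKind(Enum):
    QUICK = "quick"              # short lookups, formatting, classification
    DEVELOPMENT = "development"  # writing and changing code
    REVIEW = "review"            # critical second opinion


# Model family per task kind: Haiku / Sonnet / Opus.
MODEL_FAMILY = {
    TaskKind.QUICK: "haiku",
    TaskKind.DEVELOPMENT: "sonnet",
    TaskKind.REVIEW: "opus",
}

# Hypothetical client signature: (model_family, prompt) -> response text.
ModelCaller = Callable[[str, str], str]


def run(kind: TaskKind, prompt: str, call: ModelCaller) -> str:
    """Send the prompt to the model family assigned to this kind of task."""
    return call(MODEL_FAMILY[kind], prompt)


def draft_then_review(task: str, call: ModelCaller) -> Tuple[str, str]:
    """Draft with the development model, then ask the review model to critique it."""
    draft = run(TaskKind.DEVELOPMENT, task, call)
    critique = run(
        TaskKind.REVIEW,
        f"Critically review this answer and list concrete improvements:\n\n{draft}",
        call,
    )
    return draft, critique
```

Injecting `call` keeps the routing logic independent of any particular SDK and makes it easy to test with a fake caller.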

Phrases of the day that worked

When the database wasn’t saving anything

“We need to reconcile the AI’s response and the DB. The AI is ‘more right’ because it explains precisely each time. The DB is ‘less right’ because we can’t anticipate every possible response shape. We need to reconcile them and rethink our data structure. What are your proposals?”

This wording pushed the AI to look at the problem systemically instead of patching holes.
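One common way to act on that kind of reconciliation (a sketch of the general pattern, not the schema from this project) is to store the model's full response as raw JSON and promote only the fields the bot relies on into regular columns, so unexpected shapes still get saved instead of silently failing. The table and field names are hypothetical, and SQLite stands in for PostgreSQL so the example runs on its own; in PostgreSQL the `raw` column would naturally be `JSONB`.

```python
import json
import sqlite3

# Columns the bot actually queries; everything else stays in the raw payload.
CORE_FIELDS = ("phrase", "translation")


def make_db() -> sqlite3.Connection:
    # In-memory SQLite as a stand-in for PostgreSQL, where `raw` would be JSONB.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE breakdowns ("
        " id INTEGER PRIMARY KEY,"
        " phrase TEXT,"
        " translation TEXT,"
        " raw TEXT NOT NULL)"
    )
    return conn


def normalize(response: dict) -> dict:
    """Pull known fields into columns; missing ones become NULL, nothing is dropped."""
    row = {field: response.get(field) for field in CORE_FIELDS}
    row["raw"] = json.dumps(response, ensure_ascii=False)
    return row


def save(conn: sqlite3.Connection, response: dict) -> None:
    conn.execute(
        "INSERT INTO breakdowns (phrase, translation, raw)"
        " VALUES (:phrase, :translation, :raw)",
        normalize(response),
    )
    conn.commit()
```

The schema only has to agree with the AI on a few core fields; any new shape the model invents still lands in `raw`, where it can be analyzed later.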

For updating documentation

“analyze the session and update it surgically”

The word “surgically” set the right tone: precise edits instead of rewriting the whole document.

Can’t live without this now — and you shouldn’t either

“create a backup branch and push it to GitHub, name: V0.5.5-reading-SQL”

Professional developers do this automatically, but for me it was a genuine “aha”: create backup branches after every meaningful milestone.
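Under the hood, that request boils down to two git commands. A minimal Python sketch, assuming `origin` is the GitHub remote; the helper names are hypothetical, and `dry_run` shows the commands without running them. A branch only captures committed work, so the sketch refuses to run with a dirty working tree.

```python
import subprocess
from typing import List


def has_uncommitted_changes() -> bool:
    # `git status --porcelain` prints nothing when the working tree is clean.
    result = subprocess.run(
        ["git", "status", "--porcelain"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip() != ""


def backup_commands(branch: str, remote: str = "origin") -> List[List[str]]:
    """Commands that snapshot the current HEAD as a new branch and push it."""
    return [
        # Creates the branch at HEAD without switching to it; fails if it already exists.
        ["git", "branch", branch],
        ["git", "push", remote, branch],
    ]


def create_backup(branch: str, remote: str = "origin", dry_run: bool = False) -> List[List[str]]:
    commands = backup_commands(branch, remote)
    if dry_run:
        return commands
    # Uncommitted edits would not be part of the backup, so stop early.
    if has_uncommitted_changes():
        raise RuntimeError("Commit your changes first: a branch only captures commits.")
    for cmd in commands:
        subprocess.run(cmd, check=True)
    return commands
```

Using `git branch` rather than `git checkout -b` keeps you on the branch you were working on, so the backup is a pure snapshot and your workflow continues unchanged.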

Takeaways

- Real evidence beats arguments. When the AI insisted the model was unavailable, only logs from another project helped.

- Backup branches save the day. Create GitHub backup branches after every key milestone. Today’s V0.5.5-reading-SQL is a safe restore point if things go sideways.

- Control beats “magic prompts.” Make the AI restate the task in its own words before execution — misunderstandings surface early.

- Multi-model mode is stronger. In my experience, the Haiku → Sonnet → Opus combo produces better results than relying on a single model.