Manually cleaning data

Master manual data cleaning with proven strategies! Learn how to verify accuracy and build trust in your data analysis process.

Verify and report results

- Verification of data is crucial to ensure the data cleaning process has been executed correctly and the data is accurate and reliable.

- Verification allows for the identification and rectification of errors before beginning analysis, ensuring the insights gained can be trusted for decision-making.

- Reporting on the data cleaning process promotes transparency, builds trust within the team, and ensures all stakeholders are informed about important project details.

- Changelogs, which are files containing a chronologically ordered list of modifications made to a project, are useful tools for tracking a dataset's evolution and for communicating and reporting data to others.

Confirm data-cleaning meets business expectations

See the big picture when verifying data-cleaning

- Consider the business problem

- Consider the goal

- Consider the data

- Verification is a crucial step in any data analysis project, ensuring the reliability and accuracy of the cleaned data.

- Verification involves comparing the original unclean data set with the cleaned one and identifying and correcting any persisting issues.

- A big-picture perspective is essential during verification. It reaffirms the alignment of the project with the original business problem and goals.

- Considering the source of the data and testing the data collection and cleaning processes are crucial to ensure the data can solve the problem and meet the project objectives.

- Seeking feedback from teammates and scrutinizing any suspicious or potentially problematic data can help identify overlooked issues.

- Verification safeguards the trustworthiness of the insights gained from the analysis, helping companies avoid significant mistakes.

Example 1: Verify data with spreadsheets

Use spreadsheet tools such as Find and Replace and pivot tables to find, understand, and fix errors in your spreadsheet.

Use a pivot table to understand errors in a spreadsheet

- Select the XXX column.

- Select Insert > Pivot Table. In the Create pivot table dialog box, choose New Sheet then Create.

- This creates a new tab that is mostly blank.

- Additionally, the Pivot table editor pane is in the window.

- Next to Rows. Select Add, then the XXX column.

- Next to Values, select Add then select XXX. This adds a value for the XXX column.

- By default, Google Sheets sets the value to summarize by COUNTA (the total number of values in a range). This will show how many times each supplier name comes up. It’s a great way to check for misspellings and other anomalies. Note: Don’t use COUNT, because COUNT counts only numerical values.

- When there is only one instance of the misspelled name, manually change it to the correct spelling.

- To return to the original sheet, select the Sheet1 tab.

Example 2: Use a CASE statement to verify data in SQL

Use CASE statements to correct misspellings in SQL.

- The SQL table used in this example is not available for download, but if you were performing a similar query you’d first make sure to load the data in BigQuery.

- Start your SQL query with the basic structure:

SELECT

FROM

WHERE

- In the FROM clause, specify the table you're pulling data from after FROM. For example, project-id.customer_data.customer_name

- In the SELECT clause, specify the columns you want to return. In this example, you want customer_id and first_name.

- However, there is a misspelling in a customer’s first name.

- To correct the misspelled name "Tnoy" to "Tony", use a CASE statement.

Enter CASE. On the next line, enter WHEN first_name = 'Tnoy'THEN 'Tony'. This tells SQL to replace any instances of Tnoy in the first_name column with Tony.

On the next line, add the statement ELSE first_name to keep other names as they are.

End the statement with END AS cleaned_name.This creates a new column called cleaned_name that will contain the data cleaned with the CASE statement.

Delete the WHERE clause because you don’t want to filter the query.

The final statement should be:

SELECT

Customer_id,

CASE

WHEN first_name = 'Tnoy' THEN 'Tony'

ELSE first_name

END AS cleaned_name

FROM

project-id.customer_data.customer_name

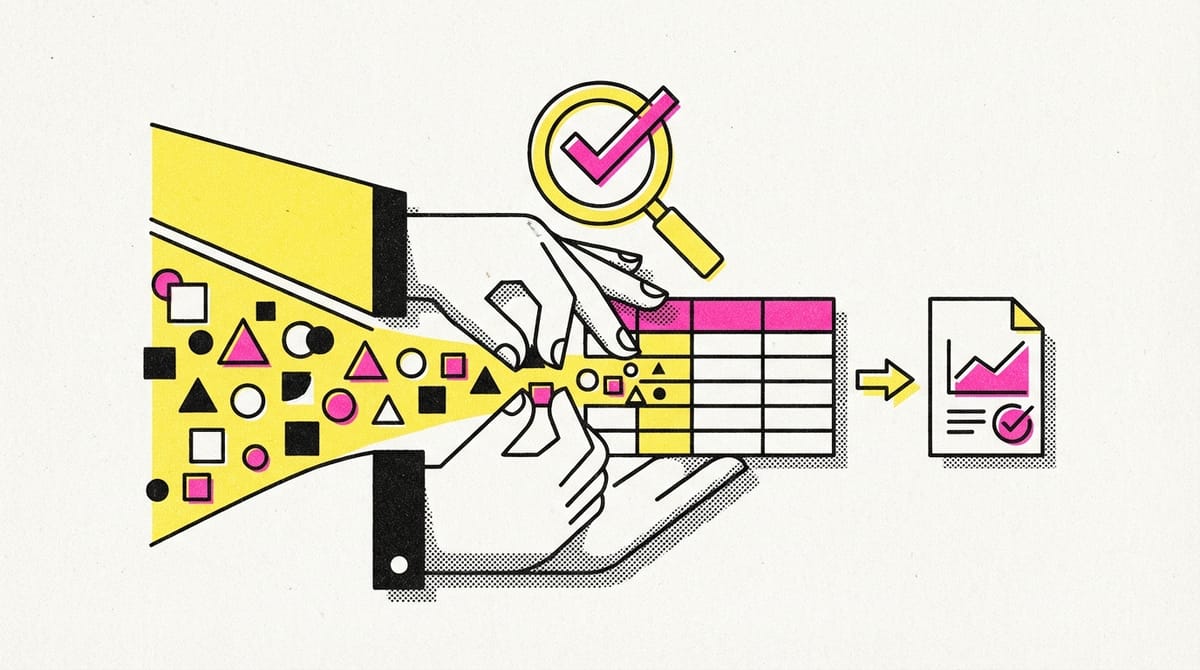

Verification of data cleaning

- Verification of data cleaning is crucial to ensure the accuracy and reliability of data.

- The process includes comparing the cleaned data to the original, unclean dataset and looking for common problems.

- Manual cleaning such as eliminating extra spaces or removing unwanted marks can be done.

- Spreadsheet tools like TRIM and remove duplicates can help fix common errors automatically.

- Using Find and Replace can help correct misspellings or errors throughout the dataset.

- In SQL, CASE statements can be used to handle misspellings or errors.

Data-cleaning verification checklist

Correct the most common problems

Make sure you identified the most common problems and corrected them, including:

- Sources of errors: Did you use the right tools and functions to find the source of the errors in your dataset?

- Null data: Did you search for NULLs using conditional formatting and filters?

- Misspelled words: Did you locate all misspellings?

- Mistyped numbers: Did you double-check that your numeric data has been entered correctly?

- Extra spaces and characters: Did you remove any extra spaces or characters using the TRIM function?

- Duplicates: Did you remove duplicates in spreadsheets using the Remove Duplicates function or DISTINCT in SQL?

- Mismatched data types: Did you check that numeric, date, and string data are typecast correctly?

- Messy (inconsistent) strings: Did you make sure that all of your strings are consistent and meaningful?

- Messy (inconsistent) date formats: Did you format the dates consistently throughout your dataset?

- Misleading variable labels (columns): Did you name your columns meaningfully?

- Truncated data: Did you check for truncated or missing data that needs correction?

- Business Logic: Did you check that the data makes sense given your knowledge of the business?