Obtaining Data

Learn how to effectively obtain data using the OSEMN model, enhancing your analytics skills and driving informed decision-making.

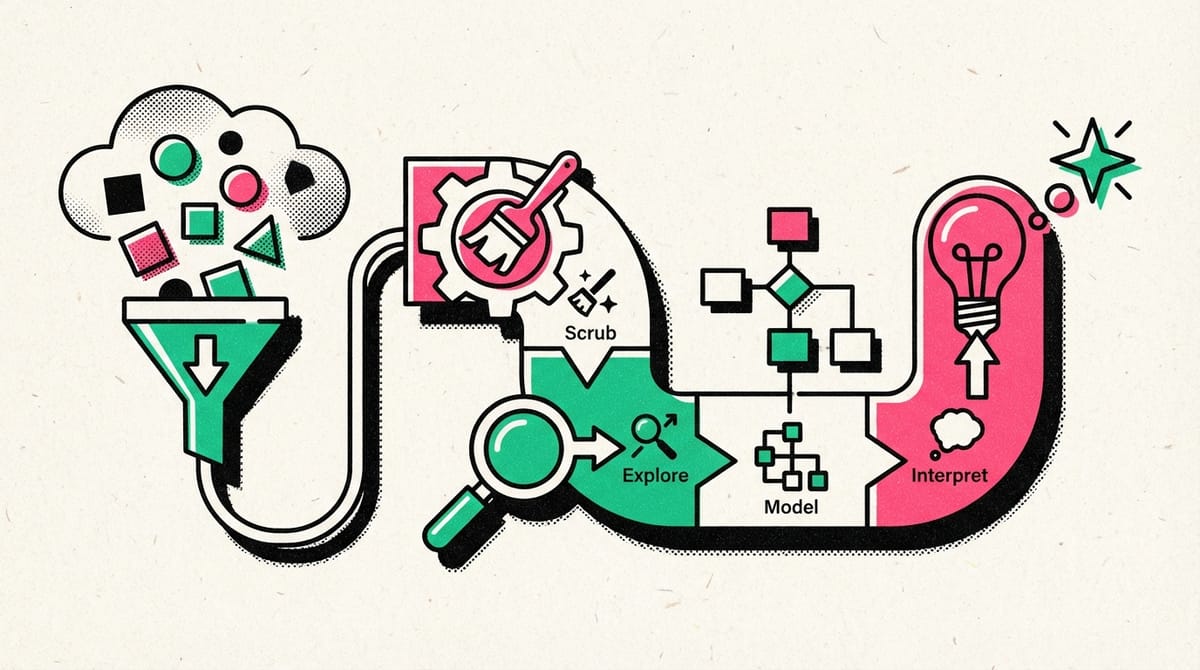

- The OSEMN model is a framework for data analytics projects, consisting of five stages: Obtain, Scrub, Explore, Model, and Interpret.

- Obtaining data involves gathering relevant information from various sources, which may require creativity and research.

- Scrubbing data prepares the dataset for analysis by cleaning and formatting it so that it is accurate and usable.

- Exploring data involves searching for patterns, trends, and anomalies through categorization and basic visualizations.

- Modeling uses statistical or mathematical approaches to generate predictions and insights from the data.

- Interpreting results includes creating visualizations, stories, and presentations to communicate findings effectively.

- The OSEMN framework provides a structured approach to data analytics projects, helping to break down complex tasks into manageable steps.
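To make the "manageable steps" idea concrete, here is a minimal sketch of the five stages as a chain of small Python functions. The sales figures, the function names, and the deliberately naive "model" (an average of past weeks) are all hypothetical. The sketch shows only how each stage hands its output to the next, not how a real project would implement any stage.

```python
from statistics import mean

# Hypothetical raw input: weekly unit sales, with None marking missing entries.
RAW_SALES = [120, None, 135, 128, None, 150]


def obtain() -> list:
    """Obtain: a real project would read a file, database, or API here."""
    return list(RAW_SALES)


def scrub(values: list) -> list[float]:
    """Scrub: drop missing entries so later stages see only valid numbers."""
    return [float(v) for v in values if v is not None]


def explore(values: list[float]) -> dict:
    """Explore: basic summary statistics to spot ranges and anomalies."""
    return {"count": len(values), "min": min(values), "max": max(values)}


def model(values: list[float]) -> float:
    """Model: a deliberately naive forecast (the mean of past weeks)."""
    return mean(values)


def interpret(summary: dict, forecast: float) -> str:
    """iNterpret: turn the numbers into a plain-language statement."""
    return (f"Based on {summary['count']} valid weeks "
            f"(range {summary['min']:.0f}-{summary['max']:.0f}), "
            f"next week's forecast is about {forecast:.0f} units.")


def run_osemn() -> str:
    """Run the stages in order, each consuming the previous stage's output."""
    data = scrub(obtain())
    return interpret(explore(data), model(data))


if __name__ == "__main__":
    print(run_osemn())
```

Keeping each stage in its own function means one stage (for example, the model) can be swapped out without touching the others.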

It's a Data World

- Data Analytics Definition: The process of collecting, cleaning, organizing, analyzing, and interpreting data to uncover insights and make informed decisions.

- OSEMN Framework: An approach to data analytics projects whose name is an acronym for Obtain, Scrub, Explore, Model, and iNterpret (pronounced "awesome").

- Data Collection: Gathering relevant and accurate data from various sources is crucial for analysis.

- Data Cleaning: Removing duplicates, inconsistencies, and errors to ensure data accuracy.

- Data Organization: Sorting and categorizing data to identify trends and patterns quickly.

- Data Analysis: Using statistical and mathematical methods to uncover insights and relationships within the data.

- Data Interpretation: Presenting findings in an easy-to-understand way, often through visualizations and storytelling.

- Practical Application: Understanding how to apply data analytics in real-world scenarios, such as inventory management or business decision-making.

- Industry-Specific Standards: Recognizing that different industries may have varying priorities and conventions for data analytics.

- Continuous Learning: Embracing inquiry, intellectual discussion, and collaboration to improve data analytics skills and outcomes.
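The cleaning and organization steps listed above can be illustrated with a small sketch. The order records, field names, and rules (keep the first copy of each order ID, standardize region labels) are hypothetical choices made for the example; real cleaning rules depend on the dataset.

```python
from collections import defaultdict

# Hypothetical order records with a duplicate and inconsistent region labels.
orders = [
    {"order_id": 1, "region": "North", "amount": 250.0},
    {"order_id": 2, "region": " south", "amount": 90.0},
    {"order_id": 1, "region": "North", "amount": 250.0},   # duplicate record
    {"order_id": 3, "region": "NORTH", "amount": 40.0},
]


def clean(records):
    """Cleaning: drop duplicate order IDs and standardize region labels."""
    seen = set()
    cleaned = []
    for rec in records:
        if rec["order_id"] in seen:
            continue  # keep only the first occurrence of each order
        seen.add(rec["order_id"])
        cleaned.append({**rec, "region": rec["region"].strip().title()})
    return cleaned


def organize(records):
    """Organization: sort by amount (largest first), then group IDs by region."""
    by_region = defaultdict(list)
    for rec in sorted(records, key=lambda r: r["amount"], reverse=True):
        by_region[rec["region"]].append(rec["order_id"])
    return dict(by_region)


if __name__ == "__main__":
    print(organize(clean(orders)))
```

Without the cleaning step, "North" and "NORTH" would land in separate groups and order 1 would be counted twice.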

Where to Look for Data

- There are three main categories of data sources:

  - Freely accessible open data sources (e.g., government websites, Eurostat, OECD)

  - Company-specific data (e.g., sales records, website analytics from Google Analytics)

  - Intentionally collected data (e.g., surveys, interviews, observations)

- When free data sources are unavailable or insufficient, companies may subscribe to industry-specific databases (e.g., Nielsen data, the Bloomberg Terminal).

- If the required data isn't available, analysts may need to create a plan to collect it through methods like questionnaires or interviews.

- Tools like SurveyMonkey and Google Forms can be used to create questionnaires for data collection.

- When starting an analytics project, it's important to consider how to obtain the necessary data, whether from free sources, company databases, or through intentional collection.
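When data is collected intentionally through a questionnaire, the responses usually end up as a table. Form tools such as Google Forms and SurveyMonkey can typically export responses as CSV; the sketch below assumes such an export, but the column names and answers are invented for illustration. It uses only Python's standard `csv` module to load the rows and tally answers to one question.

```python
import csv
import io
from collections import Counter

# Hypothetical CSV export of questionnaire responses (column names invented).
SAMPLE_EXPORT = """respondent,preferred_channel,satisfaction
r1,Email,4
r2,Phone,5
r3,Email,3
r4,Chat,4
"""


def load_responses(csv_text: str) -> list[dict]:
    """Parse CSV text into one dict per respondent, keyed by column name."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def tally(responses: list[dict], column: str) -> Counter:
    """Count how many respondents gave each answer to one question."""
    return Counter(row[column] for row in responses)


if __name__ == "__main__":
    rows = load_responses(SAMPLE_EXPORT)
    print(tally(rows, "preferred_channel").most_common())
```

For a real export, you would read the downloaded file with `open(path, newline="")` instead of the in-memory string. Note that CSV values arrive as text, so numeric answers such as `satisfaction` need converting before averaging.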

Common Data Formats

- Data formats vary widely, including numeric, text, and visual data.

- Numeric data is quantitative and can be measured or counted (e.g., age, income, sales figures).

- Text data is a common form of unstructured data and includes sources like social media posts, emails, and customer reviews.

- Visual data encompasses images, videos, and geographical maps.

- The choice of data format depends on the specific analytics question being addressed.

- Understanding different data formats is crucial for effective data analysis and presentation.

- Each data format offers unique insights and can be useful depending on the context and goals of the analysis.
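The practical difference between these formats shows up in how each must be handled before it yields a summary. The sketch below uses tiny hypothetical examples of each format: numbers can be aggregated directly, text must first be split into words, and an image is treated as a grid of grayscale pixel intensities (assuming the common 8-bit convention of 0 for black and 255 for white).

```python
from collections import Counter
from statistics import mean

# Hypothetical examples of the three broad formats described above.
numeric_data = [34, 29, 41, 52]                                     # e.g., customer ages
text_data = ["Great service", "service was slow", "great prices"]  # e.g., reviews
image_data = [                                                      # a tiny 3x3 grayscale image
    [0, 128, 255],
    [64, 128, 192],
    [255, 128, 0],
]


def summarize_numeric(values):
    """Numeric data can be measured and aggregated directly."""
    return {"mean": mean(values), "max": max(values)}


def summarize_text(documents):
    """Unstructured text needs tokenizing (here, lowercase word splitting) first."""
    words = (w.lower() for doc in documents for w in doc.split())
    return Counter(words).most_common(2)


def summarize_image(pixels):
    """An image is a grid of pixel intensities (0 = black, 255 = white)."""
    flat = [p for row in pixels for p in row]
    return {"height": len(pixels), "width": len(pixels[0]), "mean_intensity": mean(flat)}


if __name__ == "__main__":
    print(summarize_numeric(numeric_data))
    print(summarize_text(text_data))
    print(summarize_image(image_data))
```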

Sampled Data

- Definition of Sampled Data: A subset of a larger population or dataset used to represent the entire population.

- Importance of Sampling: It allows for drawing conclusions about a population while analyzing only a fraction of it, saving time and resources.

- Reasons for Using Sampled Data:

  - Large population size

  - Cost constraints

  - Time constraints

  - Destructive testing, where measuring an item destroys it (e.g., crash-testing cars)

- Considerations for Sample Quality:

  - Sample Size: Larger samples generally provide more accurate estimates

  - Representativeness: The sample should accurately represent the entire population

  - Generalizability: Be aware of limitations when applying findings to other populations

- Potential Pitfalls: Biased samples, small sample sizes, and overgeneralization can lead to inaccurate conclusions.

- Best Practices: Use random sampling methods, ensure adequate sample size, and consider the limitations of the sample when drawing conclusions.
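The claim that larger samples give more accurate estimates can be made precise for one common case: estimating a population mean from a simple random sample of n independent observations, where σ is the population standard deviation (and the finite-population correction is ignored). The standard error of the sample mean is then

$$\mathrm{SE}(\bar{x}) = \frac{\sigma}{\sqrt{n}}$$

Because of the square root, quadrupling the sample size from 100 to 400 halves the standard error ($\sqrt{400}/\sqrt{100} = 2$). This formula covers only random sampling error; a larger sample does not correct a biased or unrepresentative sampling method.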

Understanding these concepts is crucial for effective data analysis and interpretation in various fields, including market research, scientific studies, and business analytics.
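Random sampling and the effect of sample size can be sketched with Python's standard `random` module. The population below is simulated (10,000 hypothetical order values drawn from a normal distribution), and the "spread" measure, the range of sample means across repeated samples, is an illustrative choice rather than a standard statistic.

```python
import random
from statistics import fmean

# Hypothetical population: 10,000 simulated order values (mean 50, sd 15).
rng = random.Random(42)  # fixed seed so the example is reproducible
population = [rng.gauss(50, 15) for _ in range(10_000)]


def simple_random_sample(data, n, seed=None):
    """Draw n items without replacement; every item is equally likely."""
    return random.Random(seed).sample(data, n)


def sample_mean_spread(data, n, trials=200, seed=0):
    """Range (max - min) of the sample means over repeated samples of size n."""
    trial_rng = random.Random(seed)
    means = [fmean(trial_rng.sample(data, n)) for _ in range(trials)]
    return max(means) - min(means)


if __name__ == "__main__":
    print(f"Population mean: {fmean(population):.2f}")
    for n in (10, 100, 1000):
        print(n, round(sample_mean_spread(population, n), 2))
```

Running it should show the spread of sample means shrinking as `n` grows, which is the practical meaning of "adequate sample size".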

First- and Third-Party Data

- First-party data: Data collected directly by a business from its customers or internal sources (e.g., website usage statistics, point-of-sale information, customer surveys).

- Third-party data: Data gathered by outside parties not affiliated with the business (e.g., government census data, market research firm data, economic indicators).

- Source importance: Understanding the source of data is crucial for assessing its validity and reliability.

- Data collection evaluation: Knowing who collected the data allows you to evaluate how it was collected and assess its quality.

- First-party data advantages: Companies have more control over first-party data and know exactly where it came from and how it was collected.

- Third-party data uses: Often used for market research, advertising campaigns, and competitive analysis to gain insights into potential target audiences or other companies.

- Data analysis process: Once you understand the data source, you can begin to evaluate how it was collected and assess its quality for your analysis.
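First- and third-party data are often combined, for example by dividing a company's own sales by a region's population from census data. The sketch below does that and also records where each input came from, so the result can be traced back and each source's quality evaluated. The `Dataset` class, its fields, and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    party: str          # "first" or "third"
    collected_by: str   # who gathered the data (key to judging its quality)
    rows: dict          # region -> value


# Hypothetical figures for illustration only.
sales = Dataset("Store sales", "first", "our point-of-sale system",
                {"North": 120_000, "South": 45_000})
census = Dataset("Regional population", "third", "a government census office",
                 {"North": 60_000, "South": 30_000, "East": 10_000})


def sales_per_capita(first: Dataset, third: Dataset) -> dict:
    """Join on regions present in both datasets and compute sales per resident."""
    shared = first.rows.keys() & third.rows.keys()
    return {region: first.rows[region] / third.rows[region] for region in sorted(shared)}


def provenance(*datasets: Dataset) -> list[str]:
    """Describe where each input came from, for documentation and review."""
    return [f"{d.name}: {d.party}-party, collected by {d.collected_by}" for d in datasets]


if __name__ == "__main__":
    print(sales_per_capita(sales, census))
    for line in provenance(sales, census):
        print(line)
```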

Evaluating the Validity of Data Sources

- Evaluate the credibility of data sources by checking authorship, publication date, and reputation of the organization providing the data.

- Assess the methodology used for data collection, including sample size, sampling methods, and potential biases.

- Ensure the data is objective and free from conflicts of interest that could affect its validity.

- Check the accuracy of the data by comparing it with other reputable sources and looking for obvious errors.

- Verify that the data is relevant to your research question and presented with meaningful context and background information.

- Use a checklist to systematically evaluate data sources before beginning your analysis to ensure you're working with high-quality, reliable data.

- Be prepared to search for alternative data sources if the initial one doesn't meet the criteria for validity and reliability.
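The checklist approach can be written down as a small data structure, which makes it easy to apply the same criteria to every source. In this sketch the criterion names and the `SourceReview` class are hypothetical labels for the points above; any criterion left unanswered is treated as not yet met.

```python
from dataclasses import dataclass, field

# Hypothetical checklist criteria mirroring the points above.
CRITERIA = (
    "credible_source",     # known author, recent date, reputable organization
    "sound_methodology",   # adequate sample size, clear sampling method
    "objective",           # no evident bias or conflict of interest
    "accurate",            # consistent with other reputable sources
    "relevant",            # answers the research question, with context
)


@dataclass
class SourceReview:
    source: str
    answers: dict = field(default_factory=dict)  # criterion -> True/False

    def failed(self) -> list[str]:
        """Criteria not confirmed; unanswered criteria count as failures."""
        return [c for c in CRITERIA if not self.answers.get(c, False)]

    def passes(self) -> bool:
        return not self.failed()


if __name__ == "__main__":
    review = SourceReview("Vendor white paper", {
        "credible_source": True, "sound_methodology": True,
        "objective": False, "accurate": True,
    })
    print(review.failed())  # failing criteria signal a need for an alternative source
```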

Helpful Free Data Sources

Google Dataset Search

Much as Google Scholar does for academic papers, Google Dataset Search indexes millions of datasets hosted in thousands of locations across the web, including sites such as Kaggle and India's Open Government Data (OGD) Platform.

Link: https://datasetsearch.research.google.com

United States Census Bureau

The United States Census Bureau provides access to high-quality, essential data about the population, economy, and geography of the United States.

Link: www.census.gov

Pew Research Center

The Pew Research Center provides insights and analysis on a wide range of social, political, and technological issues through surveys and research.

Link: https://www.pewresearch.org/tools-and-resources/

Eurostat

As the European Union's statistical office, Eurostat provides comprehensive economic, social, and environmental data.

Link: www.ec.europa.eu/eurostat

The Organisation for Economic Co-operation and Development (OECD)

The OECD is a reliable source for comparative data and analysis on global economic and social matters.

Link: www.data.oecd.org/united-states.htm

Kaggle Datasets

Kaggle hosts hundreds of thousands of high-quality public datasets from several industries to explore, analyze, and share.

Link: www.kaggle.com/datasets

National Centers for Environmental Information (NCEI)

NCEI is part of NOAA’s National Environmental Satellite, Data, and Information Service (NESDIS) and provides environmental data, including data on climate change and global chemical measurements.

Link: www.ncei.noaa.gov

World Bank Open Data

This comprehensive dataset includes indicators such as population size and unemployment rates collected from hundreds of countries worldwide, offering insights into global economic, social, and environmental trends.

Link: www.data.worldbank.org
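Besides the website, World Bank indicators can be retrieved programmatically through its public API. The sketch below assumes the v2 URL pattern `https://api.worldbank.org/v2/country/{country}/indicator/{indicator}?format=json`, whose JSON response (to our understanding) is a two-element list of page metadata followed by the records. The indicator codes `SP.POP.TOTL` (total population) and `SL.UEM.TOTL.ZS` (unemployment, % of labor force) are examples; check the official API documentation before relying on these details.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://api.worldbank.org/v2"


def indicator_url(country: str, indicator: str, start: int, end: int) -> str:
    """Build a query URL for one country, one indicator, and a year range."""
    query = urllib.parse.urlencode({"format": "json", "date": f"{start}:{end}"}, safe=":")
    return f"{BASE_URL}/country/{country}/indicator/{indicator}?{query}"


def parse_series(payload: list) -> dict:
    """Map year -> value; assumes the records are the response's second element."""
    if len(payload) < 2 or not payload[1]:
        return {}  # error responses or empty results carry no records
    return {rec["date"]: rec["value"] for rec in payload[1]}


def fetch_series(country: str, indicator: str, start: int, end: int) -> dict:
    """Download and parse a series (requires network access)."""
    with urllib.request.urlopen(indicator_url(country, indicator, start, end)) as resp:
        return parse_series(json.load(resp))


if __name__ == "__main__":
    print(indicator_url("US", "SL.UEM.TOTL.ZS", 2015, 2020))
```

Separating URL building and parsing from the network call makes each piece easy to check without downloading anything.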

Summary: Validity of Data

- Ensure data validity before proceeding to analysis

- Check source credibility by evaluating authorship and publication date

- Examine methodology, including sample size and data collection methods

- Assess objectivity by identifying potential biases and conflicts of interest

- Verify data accuracy through consistency checks and error rate evaluation

- Confirm relevance of data to the research question and its contextual presentation

By following this checklist, you can ensure the quality and reliability of your data, leading to more accurate and meaningful analyses.
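The accuracy step in this checklist (consistency checks against a reputable reference, a scan for obvious errors, and an error rate) can be sketched in a few lines. The 5% relative tolerance, the plausible range of 0 to 100 for a percentage, and the regional figures are all hypothetical choices for illustration.

```python
def compare_sources(primary: dict, reference: dict, tolerance: float = 0.05) -> dict:
    """Flag shared keys whose relative difference from the reference exceeds
    `tolerance`, and report the share of compared keys that were flagged."""
    shared = sorted(primary.keys() & reference.keys())
    flagged = [k for k in shared
               if abs(primary[k] - reference[k]) > tolerance * abs(reference[k])]
    rate = len(flagged) / len(shared) if shared else 0.0
    return {"flagged": flagged, "error_rate": rate}


def out_of_range(values: dict, low: float, high: float) -> list:
    """Flag missing or implausible values (e.g., a percentage above 100)."""
    return sorted(k for k, v in values.items() if v is None or not low <= v <= high)


if __name__ == "__main__":
    # Made-up unemployment rates (%) from a vendor and from an official source.
    vendor = {"North": 4.0, "South": 6.2, "East": 5.4, "West": 4.6}
    official = {"North": 4.0, "South": 6.2, "East": 5.3, "West": 3.6}
    print(compare_sources(vendor, official))
    print(out_of_range({"North": 4.0, "South": None, "East": 130.0}, 0, 100))
```

A high error rate or any out-of-range values suggest investigating the source, or replacing it, before moving on to scrubbing and analysis.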