Resume as a database: an AI‑assisted experiment

Transform your job search! Discover how to turn your resume into a database with AI for tailored applications and impactful cover letters.

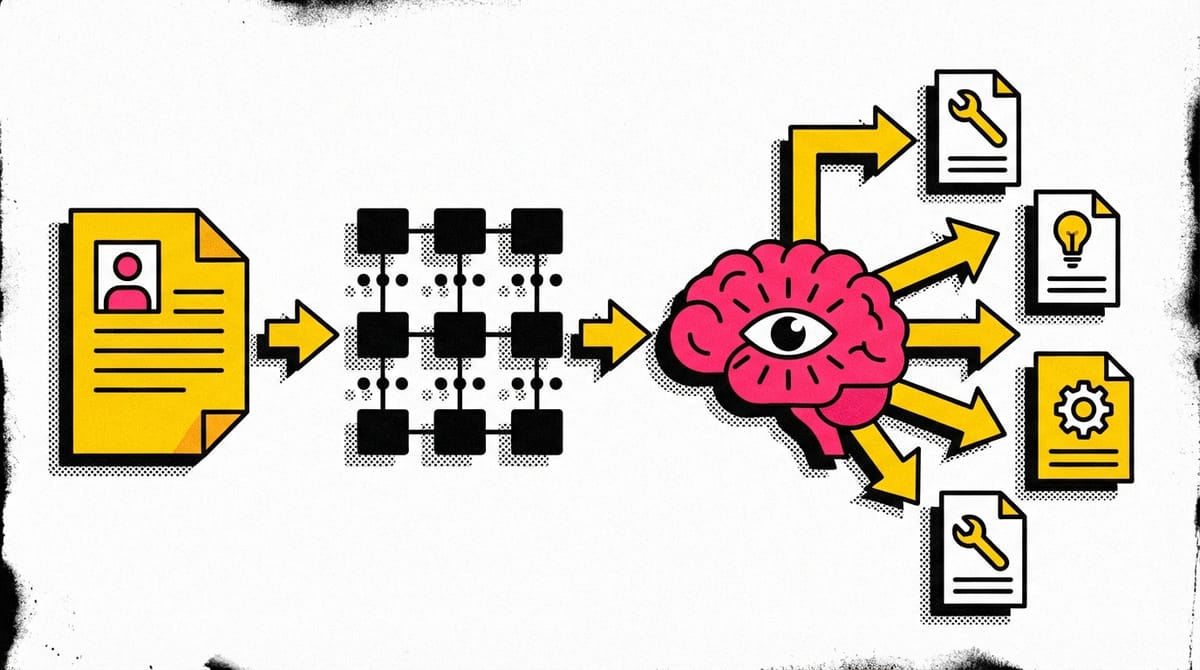

- I turned my resume into a JSON database of facts

- AI can pick the most relevant wins for each role, rank them, and draft a tailored cover letter

- Fewer buzzwords, more proof: concrete metrics and references to real projects

I decided to take my job search seriously and run an experiment with AI. I pulled together 39 achievements using the XYZ method and similar frameworks, and designed a JSON structure so AI can quickly surface the most relevant wins for each role. This is version three; I learned a lot from the first two. Let’s see if this one sticks.

Hypothesis: a resume is a database, not a document

What if a resume isn’t a finished PDF, but a database of facts? Each achievement is a structured record: what happened, how it was done, measurable results, skills involved, and technologies used.

For every application, AI can:

- Pick 10–15 relevant facts from the full set of 39

- Sort them by importance, with the must‑haves on top

- Draft a cover letter that connects my experience to the role’s requirements

- Back up claims with hard numbers from specific projects

Not “I know RAG systems,” but “I built a RAG system that processed 870 medical documents with 95% OCR accuracy and cut search time from 30 minutes to 3 seconds.”

Key numbers at a glance

- 870 documents processed

- 95% OCR accuracy

- Search time: 30 minutes → 3 seconds

- Cost to process 10 years of documents: $19

What I’m doing right now

Over the past few weeks, I’ve been moving my resume into JSON. Not because it’s trendy, but because it’s the only format I know that is:

- Machine‑readable — AI understands the structure without fragile prompt scaffolding

- Structured — facts don’t bleed into each other, each one stands alone

- Validatable — scripts can check nothing broke along the way

The setup has four levels.

Level 1: Facts (achievements)

Each achievement is its own record. Not “Product Manager for 5 years,” but concrete, measurable outcomes.

Here’s one fact (HealthRAG — a medical document management system):

```json
{
  "id": "fact_033",
  "type": "achievement",
  "company": "KSNK Media",
  "period": "2024",
  "what": "built AI-powered medical document management system for friend's chronic health condition",
  "how": "Integrated Google Document AI for OCR (processing 870 medical documents from 10+ years), Claude 3.5 Sonnet for entity extraction and normalization to LOINC codes, BigQuery for trend analytics, Qdrant for semantic search...",
  "results": {
    "standard_metrics": {
      "users": 1,
      "development_time_days": 12,
      "lines_of_code": 21000
    },
    "custom_metrics": {
      "documents_processed": 870,
      "search_time_before_minutes": 30,
      "search_time_after_seconds": 3,
      "ocr_accuracy": "95%",
      "processing_cost_total": "$19 for 10 years"
    }
  },
  "skills_used": [
    "rag_systems",
    "llm_integration",
    "vector_databases",
    "ocr_systems",
    "cost_optimization"
  ]
}
```

This isn’t prose. It’s structured evidence AI can filter, sort, and combine.
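Here is a minimal Python sketch of treating a fact as a record rather than prose. It loads a trimmed copy of the fact above and reports missing fields. The assumption that every top-level field in the fact_033 example is required is mine, and REQUIRED_FIELDS and missing_fields are hypothetical names, not the author's scripts.

```python
import json

# Assumption: every top-level field in the fact_033 example is required.
REQUIRED_FIELDS = ("id", "type", "company", "period", "what", "how", "results", "skills_used")


def missing_fields(fact: dict) -> list:
    """Return the required fields that a fact record lacks, in schema order."""
    return [name for name in REQUIRED_FIELDS if name not in fact]


# A shortened copy of fact_033 (values trimmed for the example).
raw = """
{
  "id": "fact_033",
  "type": "achievement",
  "company": "KSNK Media",
  "period": "2024",
  "what": "built AI-powered medical document management system",
  "how": "Google Document AI for OCR, Claude 3.5 Sonnet for extraction, Qdrant for search",
  "results": {"custom_metrics": {"documents_processed": 870, "ocr_accuracy": "95%"}},
  "skills_used": ["rag_systems", "ocr_systems", "cost_optimization"]
}
"""
fact = json.loads(raw)
print(missing_fields(fact))                # prints []
print(missing_fields({"id": "fact_040"}))  # hypothetical incomplete record: every field but "id"
```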

Level 2: Skills

I track 124 skills, each with a category (AI Engineering, Product Management, Leadership) and a level (expert, advanced, intermediate).

The interesting bit is the reverse links. Every skill “knows” where it was used:

```json
{
  "telegram_bots": {
    "display_name": "Telegram Bots",
    "category": "ai_engineering",
    "level": "advanced",
    "proven_by": [
      "fact_033",
      "fact_034",
      "fact_035",
      "fact_036",
      "fact_038"
    ],
    "description": "Telegram bot development and API integration for AI interfaces"
  }
}
```

See proven_by? That list is generated automatically. When I add a project that uses Telegram bots, a script updates the array.

It’s a two‑way link: an achievement lists its skills, and a skill lists the achievements that prove it.
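One way a script could generate proven_by is to invert each achievement's skills_used list, as in this sketch. The function names and sample records are hypothetical; the real achievements carry more skills than shown here.

```python
from collections import defaultdict


def build_proven_by(achievements: list) -> dict:
    """Invert achievement -> skills_used into skill -> sorted list of fact ids."""
    proven_by = defaultdict(set)
    for fact in achievements:
        for skill in fact.get("skills_used", []):
            proven_by[skill].add(fact["id"])
    return {skill: sorted(ids) for skill, ids in proven_by.items()}


def attach_reverse_links(registry: dict, achievements: list) -> None:
    """Overwrite each registry entry's proven_by array in place."""
    links = build_proven_by(achievements)
    for skill_id, entry in registry.items():
        entry["proven_by"] = links.get(skill_id, [])  # unused skills get []


# Illustrative data: skill lists are trimmed, not the author's real records.
achievements = [
    {"id": "fact_033", "skills_used": ["rag_systems", "ocr_systems"]},
    {"id": "fact_034", "skills_used": ["telegram_bots", "rag_systems"]},
]
registry = {
    "rag_systems": {"display_name": "RAG Systems"},
    "telegram_bots": {"display_name": "Telegram Bots"},
    "ocr_systems": {"display_name": "OCR Systems"},
}
attach_reverse_links(registry, achievements)
print(registry["rag_systems"]["proven_by"])  # prints ['fact_033', 'fact_034']
```

Deriving the reverse array from skills_used keeps a single source of truth. Nobody edits proven_by by hand, so the two directions cannot drift apart.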

Level 3: Priorities

Each fact carries a priority by role. The same project can be crucial for one position and irrelevant for another.

```json
{
  "priority": {
    "overall_score": 6.7,
    "for_roles": {
      "AI Product Manager": 10,
      "RAG Engineer": 9,
      "Data Analyst": 6,
      "Content Strategist": 3
    }
  }
}
```

The HealthRAG project is a 10/10 for AI Product Manager (RAG, LLM integration, cost optimization), but only a 3/10 for Content Strategist.
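Ranking by role can be sketched as a sort on the for_roles score. This version assumes a fact falls back to its overall_score when a role is not listed; the post does not say how missing roles are handled. The hospitality record and its overall_score of 4.0 are invented to show the lens flipping between roles.

```python
def role_score(fact: dict, role: str) -> float:
    """Role-specific priority, falling back to overall_score (an assumption)."""
    priority = fact.get("priority", {})
    return priority.get("for_roles", {}).get(role, priority.get("overall_score", 0))


def rank_for_role(facts: list, role: str, top_n: int = 15) -> list:
    """Return up to top_n fact ids, highest priority first; ties keep input order."""
    ranked = sorted(facts, key=lambda f: role_score(f, role), reverse=True)
    return [f["id"] for f in ranked[:top_n]]


facts = [
    # Hypothetical record standing in for the hospitality training project.
    {"id": "fact_hospitality", "priority": {"overall_score": 4.0, "for_roles": {"AI Product Manager": 0}}},
    {"id": "fact_033", "priority": {"overall_score": 6.7, "for_roles": {
        "AI Product Manager": 10, "RAG Engineer": 9, "Data Analyst": 6, "Content Strategist": 3}}},
]
print(rank_for_role(facts, "AI Product Manager"))  # prints ['fact_033', 'fact_hospitality']
print(rank_for_role(facts, "Content Strategist"))  # prints ['fact_hospitality', 'fact_033']
```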

Level 4: Metrics (results)

Every achievement tracks two kinds of metrics.

Standard metrics — reusable across projects:

```json
{
  "standard_metrics": {
    "users": 1,
    "team_size": 3,
    "development_time_days": 12
  }
}
```

Custom metrics — specific to the work:

```json
{
  "custom_metrics": {
    "documents_processed": 870,
    "search_time_before_minutes": 30,
    "search_time_after_seconds": 3,
    "cost_per_document": "$0.05–0.15",
    "ocr_accuracy": "95%"
  }
}
```

This lets AI pull the right numbers. A posting asks for cost optimization? AI finds the cost_optimization skill, sees that its proven_by list names three projects, and surfaces their figures: “$240 → $4 per month,” “$100 per session → $0.25 per month.” Not fluff: facts.
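The split between the two metric kinds can be enforced and used roughly as below. The sketch assumes the shared standard vocabulary is just the keys that appear in the examples above. STANDARD_METRIC_KEYS, unknown_standard_metrics, and metric_lines are hypothetical names.

```python
# Assumption: the shared vocabulary is just the keys shown in the examples above.
STANDARD_METRIC_KEYS = {"users", "team_size", "development_time_days", "lines_of_code"}


def unknown_standard_metrics(fact: dict) -> list:
    """Flag standard_metrics keys outside the shared vocabulary."""
    standard = fact.get("results", {}).get("standard_metrics", {})
    return sorted(set(standard) - STANDARD_METRIC_KEYS)


def metric_lines(fact: dict) -> list:
    """Flatten both metric groups into 'name: value' strings for a prompt."""
    results = fact.get("results", {})
    lines = []
    for group in ("standard_metrics", "custom_metrics"):
        for name, value in results.get(group, {}).items():
            lines.append(f"{name.replace('_', ' ')}: {value}")
    return lines


fact = {
    "results": {
        "standard_metrics": {"users": 1, "development_time_days": 12},
        "custom_metrics": {"documents_processed": 870, "ocr_accuracy": "95%"},
    }
}
print(metric_lines(fact))
# prints ['users: 1', 'development time days: 12', 'documents processed: 870', 'ocr accuracy: 95%']
print(unknown_standard_metrics({"results": {"standard_metrics": {"headcount": 5}}}))  # prints ['headcount']
```

Keeping standard keys in a closed set makes them comparable across projects. Custom metrics stay free-form because they describe the specific work.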

Why bother

With the resume in JSON, AI can do three things a PDF can’t.

1) Filter for relevance

If a role wants “RAG systems” and “multi‑model orchestration,” AI shows only the 6–7 facts where those skills matter. The rest stay out of the way.
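Relevance filtering can be sketched as a set intersection between a role's required skill ids and each fact's skills_used, ordered by how many skills overlap. This is exact matching on ids only, which is an assumption of this sketch. The fact ids and the content_strategy skill are made up.

```python
def filter_by_skills(facts: list, required_skills: list) -> list:
    """Keep facts that use at least one required skill, most overlap first."""
    required = set(required_skills)
    scored = []
    for fact in facts:
        overlap = required & set(fact.get("skills_used", []))
        if overlap:
            scored.append((len(overlap), fact))
    # list.sort is stable, so facts with equal overlap keep their original order
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [fact for _, fact in scored]


facts = [
    {"id": "fact_a", "skills_used": ["rag_systems", "vector_databases"]},
    {"id": "fact_b", "skills_used": ["rag_systems", "multi_model_orchestration"]},
    {"id": "fact_c", "skills_used": ["content_strategy"]},
]
wanted = ["rag_systems", "multi_model_orchestration"]
print([f["id"] for f in filter_by_skills(facts, wanted)])  # prints ['fact_b', 'fact_a']
```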

2) Rank by importance

Each fact has a role‑specific priority. For “AI Product Manager,” the RAG project comes first (10/10). A hospitality training project falls to the bottom (0/10).

3) Generate evidence

If a role calls for cost optimization, AI can:

- Locate the cost_optimization skill in the registry

- Check its proven_by projects

- Pull concrete metrics from each

- Phrase it as: “Cut language tutor cost from $240/month to $4/month (98.3% savings). Reduced AI psychology coach from $100/session to $0.25/month (99.75% savings).”

Not “I optimize costs,” but specific, verifiable numbers.
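The lookup chain above (skill → proven_by → metrics) and the savings percentages can be sketched like this. evidence_for and savings_pct are hypothetical helpers. The dangling fact_999 link is invented to show it being skipped. savings_pct simply compares the two figures as given, whatever their units.

```python
def savings_pct(before: float, after: float) -> float:
    """Percentage saved going from `before` to `after`, rounded to 2 places."""
    return round((before - after) / before * 100, 2)


def evidence_for(skill_id: str, registry: dict, facts_by_id: dict) -> list:
    """Follow skill -> proven_by -> facts and collect each fact's custom metrics."""
    entry = registry.get(skill_id)
    if entry is None:
        return []  # unknown skill: nothing to cite
    evidence = []
    for fact_id in entry.get("proven_by", []):
        fact = facts_by_id.get(fact_id)
        if fact is not None:  # skip dangling links instead of failing
            evidence.append((fact_id, fact.get("results", {}).get("custom_metrics", {})))
    return evidence


registry = {"cost_optimization": {"proven_by": ["fact_033", "fact_999"]}}
facts_by_id = {"fact_033": {"results": {"custom_metrics": {"processing_cost_total": "$19 for 10 years"}}}}
print(evidence_for("cost_optimization", registry, facts_by_id))
# prints [('fact_033', {'processing_cost_total': '$19 for 10 years'})]
print(savings_pct(240, 4))     # prints 98.33
print(savings_pct(100, 0.25))  # prints 99.75
```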

What already works

Right now I have:

- ✅ 39 achievements fully structured in JSON

- ✅ 124 skills with automatic two‑way links

- ✅ Validation scripts to ensure every skill referenced in achievements exists in the registry

- ✅ Automatic reverse‑link generation (skill → achievements)

- ✅ A priority system that scores relevance for eight roles

What doesn’t work yet:

- ❌ A resume generator (that’s next)

- ❌ Automatic selection of the most relevant facts for a posting

- ❌ A cover‑letter generator that explains the connections

In short, I’m building the “ingredients” — a fact database. Next up is teaching AI to cook with it.

How it works under the hood

There are a few files.

master_resume_v3.json (4,400 lines, 135 KB)

- The source of truth. Includes meta (change history), profile, companies (nine companies with grouped achievements), and achievements (39 facts).

skills_registry.json

- All 124 skills with reverse links. Generated automatically from the source list.

companies_container.json

- Achievements grouped by company. Updates automatically as I add projects.
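A container like companies_container.json could be rebuilt from the achievements with a single pass, as sketched here. The sketch assumes each period is a four-digit year string, so plain string sorting orders them. The output shape, the 2025 period, and "Example Co" are hypothetical.

```python
def group_by_company(achievements: list) -> dict:
    """Group fact ids by company and derive each company's period."""
    container = {}
    for fact in achievements:
        entry = container.setdefault(fact["company"], {"achievements": [], "years": set()})
        entry["achievements"].append(fact["id"])
        entry["years"].add(fact["period"])
    for entry in container.values():
        years = sorted(entry.pop("years"))  # 4-digit year strings sort correctly
        entry["period"] = years[0] if len(years) == 1 else f"{years[0]}-{years[-1]}"
    return container


achievements = [
    {"id": "fact_033", "company": "KSNK Media", "period": "2024"},
    {"id": "fact_034", "company": "KSNK Media", "period": "2025"},
    {"id": "fact_001", "company": "Example Co", "period": "2019"},
]
container = group_by_company(achievements)
print(container["KSNK Media"])
# prints {'achievements': ['fact_033', 'fact_034'], 'period': '2024-2025'}
```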

A set of Python scripts:

- Validate that every entry in achievements.skills_used exists in skills_registry

- Generate proven_by arrays for each skill

- Recalculate priority scores when I add projects

- Group achievements by company and fill in periods automatically
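The validation step might look roughly like this. It checks both directions: skills cited by achievements must exist in the registry, and proven_by entries must point at real facts. The function names and the deliberately misspelled sample data are hypothetical.

```python
import sys


def unknown_skills(achievements: list, registry: dict) -> dict:
    """Map fact id -> skills it cites that are missing from the registry."""
    problems = {}
    for fact in achievements:
        missing = [s for s in fact.get("skills_used", []) if s not in registry]
        if missing:
            problems[fact["id"]] = missing
    return problems


def dangling_links(registry: dict, achievements: list) -> dict:
    """Map skill id -> proven_by entries that point at no existing fact."""
    known = {fact["id"] for fact in achievements}
    result = {}
    for skill, entry in registry.items():
        bad = [fid for fid in entry.get("proven_by", []) if fid not in known]
        if bad:
            result[skill] = bad
    return result


def validate(achievements: list, registry: dict) -> int:
    """Print problems to stderr and return an exit code (0 means clean)."""
    problems = unknown_skills(achievements, registry)
    dangling = dangling_links(registry, achievements)
    for fact_id, skills in problems.items():
        print(f"{fact_id}: unknown skills {skills}", file=sys.stderr)
    for skill, ids in dangling.items():
        print(f"{skill}: proven_by points at missing facts {ids}", file=sys.stderr)
    return 1 if problems or dangling else 0


achievements = [{"id": "fact_033", "skills_used": ["rag_systems", "ocr_sytems"]}]  # typo on purpose
registry = {
    "rag_systems": {"proven_by": ["fact_033"]},
    "ocr_systems": {"proven_by": ["fact_033", "fact_099"]},  # fact_099 does not exist
}
exit_code = validate(achievements, registry)  # a CLI script would pass this to sys.exit
```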

Everything is versioned in git. Each change is documented in the meta section — what changed, when, and why.

Example meta entry:

```json
{
  "meta": {
    "ai_projects_portfolio_addition": {
      "date": "2025-11-02",
      "changes": [
        "Added 7 AI personal projects (fact_033 - fact_039)",
        "Added 12 new skills: telegram_bots, ocr_systems, multi_model_orchestration...",
        "All projects demonstrate RAG systems, vector databases, workflow automation"
      ],
      "skills_updated": {
        "total_before": 112,
        "total_after": 124
      }
    }
  }
}
```

Full transparency.
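A meta entry in the shape shown above could be written by a small helper like this one. record_change is a hypothetical name. In practice the caller might pass len(registry) before and after an update as the skill counts.

```python
from datetime import date
from typing import Optional


def record_change(resume: dict, key: str, changes: list,
                  skills_before: int, skills_after: int,
                  when: Optional[date] = None) -> dict:
    """Add a meta entry shaped like the example above and return it."""
    entry = {
        "date": (when or date.today()).isoformat(),
        "changes": list(changes),
        "skills_updated": {"total_before": skills_before, "total_after": skills_after},
    }
    resume.setdefault("meta", {})[key] = entry
    return entry


resume = {}
record_change(
    resume,
    "ai_projects_portfolio_addition",
    ["Added 7 AI personal projects (fact_033 - fact_039)"],
    skills_before=112,
    skills_after=124,
    when=date(2025, 11, 2),
)
print(resume["meta"]["ai_projects_portfolio_addition"]["date"])  # prints 2025-11-02
```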

Why I think this can work

Three reasons I’m optimistic.

1) LLMs work well with structure

Claude and GPT‑4 handle JSON beautifully. They can reason over links, filter by criteria, and rank by relevance.

Give AI an unstructured resume and it has to extract facts first (and might get them wrong) before it can reason.

Give AI JSON and the facts are already there — it only needs to filter and combine.
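Handing the model structured facts can be as simple as serializing the selected records into the prompt. The sketch stops at building the string and does not call any particular model API. build_prompt and its instruction wording are hypothetical.

```python
import json


def build_prompt(posting: str, facts: list) -> str:
    """Assemble a prompt that hands the model structured facts, not prose."""
    payload = json.dumps({"facts": facts}, ensure_ascii=False, indent=2)
    return (
        "Job posting:\n"
        + posting.strip()
        + "\n\nCandidate facts as JSON. Use only these facts and their metrics;"
        + " do not invent numbers.\n"
        + payload
    )


facts = [{"id": "fact_033", "results": {"custom_metrics": {"documents_processed": 870}}}]
prompt = build_prompt("AI Product Manager, RAG experience required.", facts)
print(prompt)
```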

2) The problem isn’t AI, it’s data

Most resume generators lack structured facts, so they produce nice‑sounding filler.

I have specifics: “870 documents,” “95% OCR accuracy,” “$19 to process a decade,” “30 minutes → 3 seconds.” These aren’t invented by AI — they’re real numbers I curated and structured.

Good data in, good writing out.

3) Modularity = flexibility

When facts are atomic, you can mix and match.

For an AI Product Manager role, I surface:

- Six RAG projects with metrics

- Three cost optimization cases

- Two examples of multi‑model orchestration

For a Team Lead role:

- Five projects where I ran teams of 3–40

- Four wins in stakeholder management

- Three crisis‑management cases

For a Content Strategist role:

- Organic growth of 7,000 followers

- 270% engagement lift

- 75+ articles with metrics

One database. Many lenses.

What could go wrong

Plenty.

- ATS might not love AI‑polished resumes

- The structure could be heavier than it’s worth

- Even with good data, AI might still write generic copy

- Some recruiters may prefer imperfect but “human” resumes

Even then, the fact database is useful by itself.

I can see patterns in my own work:

- Of 39 achievements, only six are true RAG projects

- telegram_bots shows up in five projects

- My strongest recurring theme is Stakeholder Management (ten projects)

- LLM Integration grew from two to eight projects

That’s valuable — it shows where I’m strong and where to grow.
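Counts like these fall out of a simple tally over skills_used, as in this sketch. It counts each skill at most once per achievement. skill_counts is a hypothetical name, and the three sample records are trimmed.

```python
from collections import Counter


def skill_counts(achievements: list) -> Counter:
    """Count how many achievements cite each skill (once per achievement)."""
    return Counter(
        skill for fact in achievements for skill in set(fact.get("skills_used", []))
    )


achievements = [
    {"id": "fact_033", "skills_used": ["rag_systems", "llm_integration"]},
    {"id": "fact_034", "skills_used": ["telegram_bots", "llm_integration"]},
    {"id": "fact_035", "skills_used": ["telegram_bots", "stakeholder_management"]},
]
counts = skill_counts(achievements)
print(counts["telegram_bots"], counts["rag_systems"])  # prints 2 1
```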

What’s next

Steps from here:

- Build the resume generator
  - Input: job posting + my JSON → tailored resume + cover letter

- Test it on real openings
  - 20–30 roles across AI PM, Data Analyst, Content Strategist, Team Lead

- Compare response rates
  - AI‑generated vs. a conventional resume

- Decide if it works
  - If it does, I’ll share the approach and code. If not, I’ll share why and what I learned.

Either way, it’ll be worth it.
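The generator's first step is turning a job posting into skill ids. Here it is sketched with plain keyword matching on the registry's display names, which is a deliberately naive stand-in for the AI step planned above. A real version would need synonyms or an LLM to catch paraphrases. skills_in_posting is a hypothetical name.

```python
import re


def skills_in_posting(posting: str, registry: dict) -> list:
    """Return registry skill ids whose name appears in the posting text."""
    text = posting.lower()
    found = []
    for skill_id, entry in registry.items():
        # try both the display name and the id with underscores as spaces
        names = {entry.get("display_name", skill_id).lower(), skill_id.replace("_", " ")}
        if any(re.search(r"\b" + re.escape(name) + r"\b", text) for name in names):
            found.append(skill_id)
    return found


registry = {
    "telegram_bots": {"display_name": "Telegram Bots"},
    "rag_systems": {"display_name": "RAG Systems"},
    "ocr_systems": {"display_name": "OCR Systems"},
}
posting = "We need hands-on experience with RAG systems and Telegram bots."
print(skills_in_posting(posting, registry))  # prints ['telegram_bots', 'rag_systems']
```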

Wrapping up

Right now I’m in the “structure the data” phase. Laying the groundwork.

Next, I want AI to turn those ingredients into resumes that actually hit the mark.

I believe the future isn’t one resume you send everywhere, but an adaptive system that shows the right evidence for each application. With proof. With numbers. With clear links between facts.

Maybe it works. Maybe it doesn’t.

But without trying, I won’t know.

Current project status:

- ✅ Data structure is ready

- ✅ 39 achievements structured

- ✅ 124 skills with two‑way links

- ✅ Validation scripts in place

- ⏳ Resume generator in progress

Stay tuned.