Scrubbing Data

Learn how to scrub data effectively to enhance accuracy and reliability in your analysis. Transform dirty data into clean, usable insights!

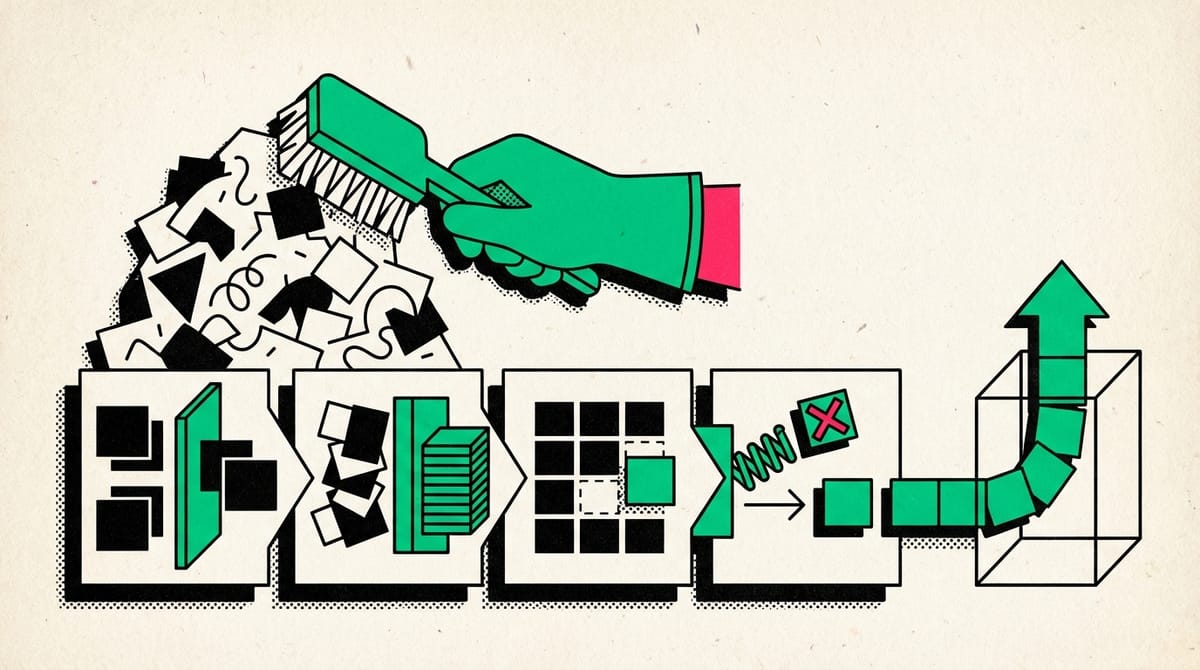

- Scrubbing data is the second phase of the OSEMN (Obtain, Scrub, Explore, Model, iNterpret) framework, focusing on cleaning and preparing data for analysis.

- The scrubbing process transforms raw, dirty data into clean, usable data.

- Four main tasks in data scrubbing:

    - Removing duplicates

    - Formatting records

    - Handling missing values

    - Checking records for mistakes or wrong values

- Clean data is essential for accurate analysis and reliable conclusions.

- Dirty data can lead to errors in exploration, analysis, and decision-making.

- Taking the time to scrub data thoroughly helps avoid problems in later stages of the data analysis process.

Remove Duplicate Records

- Removing duplicates is a crucial first step in the data scrubbing process to ensure accurate analysis.

- Duplicates can occur due to human error (e.g., multiple data entries) or machine errors (e.g., accidental double scanning).

- Duplicate records can distort analysis results and lead to inaccurate conclusions.

- Duplicates can be identified manually or with built-in tools, such as Excel's Remove Duplicates command or its Duplicate Values conditional-formatting rule.

- Before removing duplicates, confirm that the repeated records are genuine errors. Records that look alike may describe different entities whose distinguishing details are missing (e.g., two people with the same first and last name whose middle names were not recorded).

- Removing duplicates is an essential step in preparing data for further analysis and modeling in the OSEMN (Obtain, Scrub, Explore, Model, and iNterpret) framework.
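A minimal sketch in Python (standard library only), assuming each record is a dictionary in a list; the field names and sample values are hypothetical. Exact repeats are dropped automatically, while records that merely share a first and last name are only reported, so a person can decide whether they are real duplicates or different people with missing details.

```python
# Hypothetical sample records; field names and values are illustrative only.
records = [
    {"first": "Ana", "middle": "M", "last": "Silva", "city": "Boston"},
    {"first": "Ana", "middle": "M", "last": "Silva", "city": "Boston"},  # exact repeat (double entry)
    {"first": "Ana", "middle": "", "last": "Silva", "city": "Boston"},   # middle name missing
    {"first": "Raj", "middle": "K", "last": "Patel", "city": "Denver"},
]


def record_key(record):
    """Build a hashable key from every field, so only exact repeats match."""
    return tuple(sorted(record.items()))


def remove_duplicates(records):
    """Keep the first occurrence of each exact record, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def possible_duplicates(records, fields=("first", "last")):
    """Group record indices that share the given fields, for manual review."""
    groups = {}
    for i, record in enumerate(records):
        groups.setdefault(tuple(record[f] for f in fields), []).append(i)
    return {key: idxs for key, idxs in groups.items() if len(idxs) > 1}


unique = remove_duplicates(records)
print(len(unique))                  # 3: the exact repeat is gone
print(possible_duplicates(unique))  # {('Ana', 'Silva'): [0, 1]}: review by hand
```

Keying on every field is deliberately strict: it removes only records that are identical, and leaves near-matches for a human decision.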

Format Your Records

- Data formatting is crucial for accurate analysis and consistent results.

- Two main aspects of data formatting:

    - Ensuring consistent formatting throughout the dataset

    - Associating data with the correct data type

- Inconsistent formatting can lead to inaccurate summaries and confuse data analytics tools.

- Examples of formatting issues:

    - Location data: standardizing variant city names (e.g., "New York City", "NYC", "New York", "NY") to a single spelling

    - Currency: converting prices to a single currency so they can be compared

- Different data types (text, numbers, dates) require specific formatting rules for correct interpretation by software and databases.

- Tools like Excel can be used to update and standardize data formatting.

- Proper formatting enables accurate summarization, analysis, and visualization of data.

- Consistent formatting is essential for calculating statistics such as averages and maximums, and for identifying trends.

- Data cleaning and formatting are crucial steps in the data analysis process, preparing data for further exploration and analysis.
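A minimal Python sketch of both aspects, assuming records arrive as text in a list of dictionaries. The alias table, field names, the `$` price format, and the `YYYY-MM-DD` date format are hypothetical choices for illustration; a real dataset needs its own mapping and parsing rules. Currency conversion is left out because it requires exchange rates from a trusted source.

```python
from datetime import datetime

# Hypothetical alias table mapping variant spellings to one canonical name.
CITY_ALIASES = {
    "new york city": "New York City",
    "nyc": "New York City",
    "new york": "New York City",
    "ny": "New York City",
}


def standardize_city(raw):
    """Trim whitespace and map known variants to a single canonical name."""
    cleaned = raw.strip()
    return CITY_ALIASES.get(cleaned.lower(), cleaned)


def parse_price(raw):
    """Convert a text price such as '$1,200.50' into a number (float)."""
    return float(raw.replace("$", "").replace(",", "").strip())


def parse_date(raw):
    """Convert a 'YYYY-MM-DD' string into a datetime.date."""
    return datetime.strptime(raw.strip(), "%Y-%m-%d").date()


rows = [
    {"city": "NYC", "price": "$1,200.50", "sold_on": "2024-03-01"},
    {"city": " New York City ", "price": "900", "sold_on": "2024-03-02"},
    {"city": "Boston", "price": "$1,049.50", "sold_on": "2024-03-05"},
]

# Apply one formatting rule per field so every record ends up consistent.
formatted = [
    {
        "city": standardize_city(row["city"]),
        "price": parse_price(row["price"]),
        "sold_on": parse_date(row["sold_on"]),
    }
    for row in rows
]

prices = [row["price"] for row in formatted]
print(sum(prices) / len(prices))  # 1050.0
print(max(prices))                # 1200.5
```

On the original strings, `max` would compare text alphabetically (returning `"900"`) and the average would raise a `TypeError`; once prices are numbers, both statistics behave as expected.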

Handle Missing Values

- Missing values are common in datasets and can occur due to various reasons such as unknown information or machine errors.

- There are two main options for handling missing values:

    - Fill in the missing values with indicators like "unknown" or "N/A"

    - Remove records with missing values (less preferred option)

- Filling in missing values is generally preferred because it preserves the other valuable data in the record and avoids introducing bias.

- When filling in missing values, consistency is key. Use the same indicator (e.g., "unknown" or "N/A") throughout the dataset.

- Removing records with missing values can lead to loss of valuable information and potentially introduce bias in the data.

- It's crucial to handle missing values before starting the analysis to ensure accurate results.

- Spreadsheet tools often provide features to easily detect and fill in missing values during the data cleaning process.

- Proper handling of missing values is an essential step in the data cleaning process, contributing to more reliable and accurate analyses.
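A minimal Python sketch of the two options, assuming records are dictionaries in a list and that `None` or a blank string counts as missing (an assumption; some datasets use sentinels such as `-1` or `"?"`). The field names and the single `"unknown"` indicator are hypothetical.

```python
MISSING = "unknown"  # one indicator, used consistently across the dataset

# Hypothetical contact records with gaps.
contacts = [
    {"name": "Ana", "email": "ana@example.com", "phone": None},
    {"name": "Raj", "email": "", "phone": "555-0100"},
    {"name": "Lee", "email": "lee@example.com", "phone": "555-0101"},
]


def is_missing(value):
    """Treat None and blank or whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def count_missing(rows):
    """Count gaps per field, to spot problems before analysis starts."""
    counts = {}
    for row in rows:
        for field, value in row.items():
            if is_missing(value):
                counts[field] = counts.get(field, 0) + 1
    return counts


def fill_missing(rows, indicator=MISSING):
    """Option 1 (preferred): keep every record and mark gaps with one indicator."""
    return [
        {field: (indicator if is_missing(value) else value) for field, value in row.items()}
        for row in rows
    ]


def drop_incomplete(rows):
    """Option 2: discard any record with a gap, losing the rest of its data."""
    return [row for row in rows if not any(is_missing(v) for v in row.values())]


print(count_missing(contacts))         # {'phone': 1, 'email': 1}
print(len(fill_missing(contacts)))     # 3 records kept
print(len(drop_incomplete(contacts)))  # 1 record kept
```

The difference in record counts shows the trade-off described in this section: dropping incomplete records here discards two names and an email and phone number that were perfectly usable.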

Check for Wrong Values

- Checking for obviously wrong values is a crucial final step in data scrubbing and cleaning.

- Understanding the context of data collection helps identify incorrect values and avoid flagging correct data as wrong.

- Treat obviously wrong data points like missing values: replace them with an error indicator, or delete the entire record if necessary.

- Negative values may be correct in some contexts, such as recording returns in a sales database.

- Always consider the data's context and expected range of values when identifying incorrect data points.

- Proper data cleaning and verification ensure a clean dataset for the next phase of analysis in the OSEMN framework.
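A minimal sketch of rule-based checks in Python, assuming a small sales table stored as a list of dictionaries. The field names, the allowed range for `quantity`, and the `"error"` indicator are hypothetical; the point is that the rules encode context, so a negative quantity (a return) passes while an implausible one is flagged.

```python
ERROR = "error"  # one indicator for values that fail validation

# Hypothetical validation rules; real ones should come from knowing how the data was collected.
RULES = {
    # Returns are recorded as negative quantities, so negatives are allowed.
    "quantity": lambda q: -100 <= q <= 100,
    # A unit price should never be negative.
    "unit_price": lambda p: p >= 0,
}

sales = [
    {"order": 1, "quantity": 3, "unit_price": 19.99},
    {"order": 2, "quantity": -1, "unit_price": 19.99},   # a return: negative but valid
    {"order": 3, "quantity": 5000, "unit_price": 4.50},  # far outside the expected range
    {"order": 4, "quantity": 2, "unit_price": -7.00},    # negative price: wrong
]


def validate(row, rules=RULES, indicator=ERROR):
    """Return a copy of the row with rule-breaking values replaced by the indicator."""
    checked = dict(row)
    for field, is_valid in rules.items():
        if not is_valid(row[field]):
            checked[field] = indicator
    return checked


cleaned = [validate(row) for row in sales]
flagged = [row["order"] for row in cleaned if ERROR in row.values()]
print(flagged)  # [3, 4]
```

To delete flagged records instead of marking them, keep only the rows where `ERROR not in row.values()`.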

Summary

- Data scrubbing is crucial for analysis: It prepares your dataset by cleaning and organizing the information.

- Remove duplicates: Identify and eliminate duplicate records to ensure data accuracy.

- Maintain consistent formatting: Ensure all data follows a uniform format and has clear data types.

- Address missing values: Identify missing data and either replace it with placeholders or remove the entire record.

- Correct wrong values: Scan for incorrect data and replace it with accurate information when possible (otherwise with an error indicator), or remove the record if necessary.

- Use a systematic approach: Following a checklist helps ensure thorough and consistent data scrubbing.

- Data quality impacts analysis: Clean, well-organized data is essential for accurate and reliable analytical results.